As artificial intelligence (AI) evolves rapidly, organizations that want to use AI responsibly and effectively need a robust AI Policy. The international standard ISO/IEC 42001 for AI Management Systems (referred to here as ISO 42001) provides a structured framework to help organizations develop a comprehensive AI Policy for the responsible use and creation of AI.

Understanding ISO 42001

The ISO 42001 standard outlines a set of requirements and guidelines for establishing, implementing, maintaining, and continually improving an AI Management System. At its core, it emphasizes the need for an AI Policy that aligns with the organization’s goals, legal requirements, and ethical considerations.

Step 1: Establish the AI Policy

Top Management Involvement: The development of an AI Policy should be initiated by top management, ensuring it:

- Reflects the organization’s purpose

- Provides a framework for setting AI objectives

- Commits to compliance with applicable requirements and to continual improvement

Documentation and Accessibility: The AI Policy must be documented and made accessible, ensuring it is communicated within the organization and available to relevant external parties as appropriate.

Step 2: Inform the AI Policy Through Organizational Insights

Incorporate Business Strategy and Culture: Your AI Policy should reflect the organization’s business strategy, values, and cultural context, taking into account the level of risk the organization is willing to accept.

Consider Legal and Risk Factors: It should also account for the legal landscape, the inherent risks of AI systems, and the organization’s risk environment, considering the potential impact on stakeholders.

Step 3: Define Principles and Processes

Principles: Outline clear principles guiding AI-related activities, ensuring they promote ethical use, transparency, and accountability.

Processes for Deviations: Establish processes to handle deviations from the policy, ensuring exceptions are managed effectively.
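One way to make the deviation process concrete is to keep a register of approved exceptions, each with an owner and an expiry date. The sketch below is illustrative only: the `PolicyDeviation` fields, the role name, and the sample entries are assumptions made for this example, not requirements taken from ISO 42001.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PolicyDeviation:
    """A hypothetical record of one approved exception to the AI Policy."""
    system: str          # AI system the exception applies to
    principle: str       # policy principle being deviated from
    justification: str   # why the exception is needed
    approved_by: str     # role that accepted the risk
    expires: date        # date after which the exception lapses


def active_deviations(register, today):
    """Return the exceptions that are still in force on `today`."""
    return [d for d in register if d.expires >= today]


def expired_deviations(register, today):
    """Return the exceptions that have lapsed and need renewal or closure."""
    return [d for d in register if d.expires < today]


# Sample entries, invented for illustration.
register = [
    PolicyDeviation("chatbot-pilot", "Transparency",
                    "Vendor model lacks documentation",
                    "AI Governance Lead", date(2025, 6, 30)),
    PolicyDeviation("cv-screening", "Human oversight",
                    "Review backlog during migration",
                    "AI Governance Lead", date(2025, 1, 31)),
]

today = date(2025, 3, 1)
print([d.system for d in active_deviations(register, today)])   # ['chatbot-pilot']
print([d.system for d in expired_deviations(register, today)])  # ['cv-screening']
```

Giving every exception an expiry date means a deviation cannot quietly become permanent: it is either renewed with a fresh justification or closed.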

Step 4: Address Topic-Specific Aspects

Cross-References to Other Policies: Your AI Policy should provide additional guidance on AI-specific topics such as resource management, impact assessments, and system development, and detail how these aspects intersect with other organizational policies.

Alignment and Control: Ensure the AI Policy is aligned with other organizational policies, identifying areas where AI objectives may affect or be affected by other policies.

Step 5: Implement and Control

Analysis and Intersection: Perform a thorough analysis to identify intersections between AI initiatives and other domains such as quality, security, safety, and privacy. Update existing policies or incorporate provisions in the AI Policy as needed.

Step 6: Review and Improve

Scheduled Reviews: The AI Policy should undergo regular reviews to assess its effectiveness and suitability. These reviews should consider changes in the organizational environment, legal conditions, and technological advancements.

Responsibility and Evaluation: Assign a management-approved role responsible for the policy’s development, review, and evaluation. This role should also explore opportunities for policy improvement.
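The review logic in this step can be sketched as a simple check: a review is due when the scheduled interval has elapsed, or earlier when something relevant has changed. The annual interval and the idea of passing in a list of trigger events are assumptions for this example; ISO 42001 does not prescribe a specific cadence here.

```python
from datetime import date, timedelta

# Assumed review cycle; pick an interval that suits your organization.
REVIEW_INTERVAL = timedelta(days=365)


def review_due(last_review, today, triggers=()):
    """Return True when the scheduled review is overdue or a trigger occurred.

    `triggers` is a hypothetical list of change events, such as a new
    regulation or a major change in the AI systems in use.
    """
    overdue = today - last_review >= REVIEW_INTERVAL
    return overdue or len(triggers) > 0


print(review_due(date(2024, 1, 15), date(2024, 9, 1)))   # False
print(review_due(date(2024, 1, 15), date(2024, 9, 1),
                 triggers=["new AI regulation"]))        # True
print(review_due(date(2023, 1, 15), date(2024, 9, 1)))   # True
```

The role assigned to own the policy could run a check like this on a schedule and log each trigger, so that the reasons for an early review are recorded.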

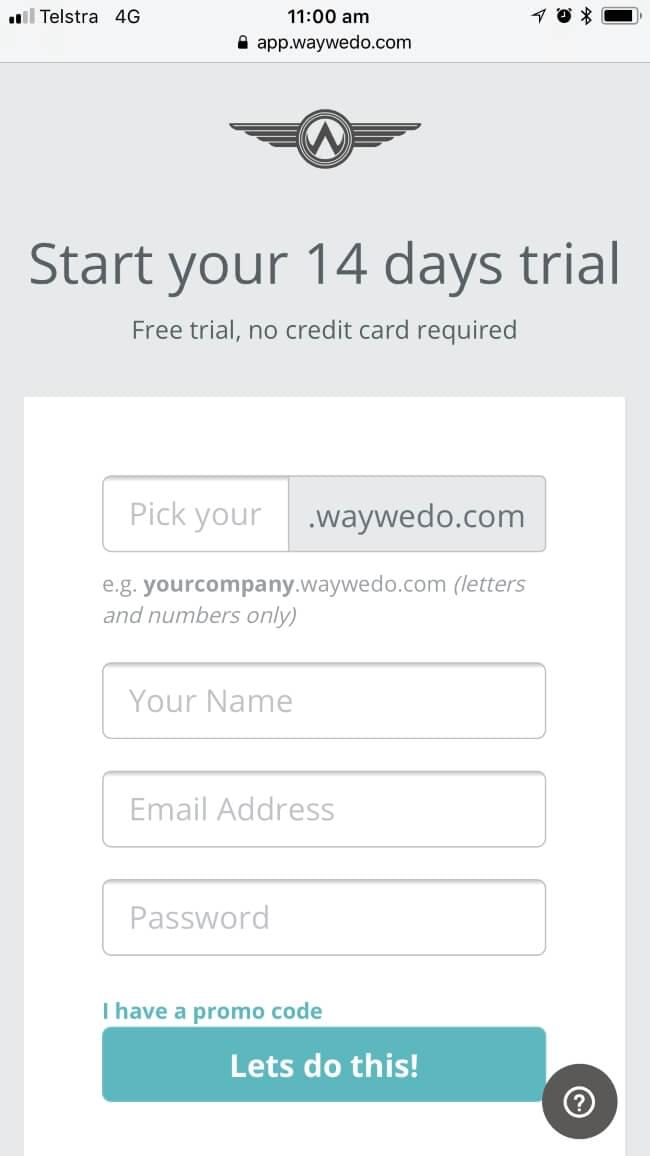

Use these 6 steps to create your own AI Policy with the assistance of Way We Do AI.

Don’t have an account? Sign up for one today.

10 Principles to consider for your AI Policy

Principles or policy statements are an integral part of any policy document. Here are 10 principles for you to consider for yours…

- Ethical Principles at the Core

At the heart of any AI Policy should be a set of ethical principles that guide the development and use of AI technologies. These principles, including fairness, accountability, transparency, and respect for privacy, serve as the moral compass for organizations, ensuring that AI systems are designed and deployed in a manner that upholds human dignity and rights.

- Transparency and Explainability

AI systems, often perceived as ‘black boxes’, can lead to trust issues among users and stakeholders. An effective AI Policy must emphasize the importance of transparency and explainability, ensuring that the workings of AI systems are understandable and that decisions made by AI can be explained in human terms. This not only builds trust but also facilitates regulatory compliance and accountability.

- Privacy and Data Protection

In an era where data is the new oil, safeguarding personal and sensitive information is crucial. An AI Policy must include stringent data governance frameworks that protect privacy and ensure that data collection, storage, and processing are done ethically and in compliance with applicable data protection regulations such as the GDPR.

- Safety and Security

As AI systems become more integrated into critical infrastructure and everyday applications, ensuring their safety and security is paramount. AI policies should mandate rigorous testing and validation of AI systems to prevent harm and ensure that they are resilient against cyber threats and operational failures.

- Accountability and Oversight

Who is responsible when an AI system makes a mistake? An effective AI Policy must address accountability, delineating clear guidelines on liability and establishing mechanisms for redress. Furthermore, continuous oversight through AI governance bodies or committees can ensure that AI systems remain aligned with the organization’s ethical principles and policy standards.

- Fairness and Non-discrimination

AI systems are only as unbiased as the data they are trained on. To prevent AI from perpetuating or amplifying societal biases, policies must include measures to identify and mitigate bias in AI systems, ensuring that they are fair and do not discriminate against any individual or group.

- Human-Centric Approach

AI should augment, not replace, human intelligence and decision-making. Policies should advocate for a human-centric approach to AI, ensuring that AI systems are designed with human oversight and intervention capabilities, thus preventing undue reliance on automated decisions.

- Societal and Environmental Well-being

The broader impacts of AI on society and the environment cannot be overlooked. An AI Policy should encourage the development and use of AI in ways that promote societal well-being and environmental sustainability, addressing concerns such as job displacement and energy consumption.

- International Collaboration

In our interconnected world, the challenges and opportunities of AI transcend national boundaries. Policies should promote international collaboration to share best practices, harmonize regulatory approaches, and address global challenges such as digital inequality.

- Continuous Learning and Adaptation

Finally, an AI Policy should not be static. It must evolve with the advancing technology, changing societal values, and emerging regulatory landscapes. Continuous learning, stakeholder engagement, and policy iteration are essential to remain relevant and effective.
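Some of these principles can be backed by simple measurements. As an illustration of the Fairness and Non-discrimination principle above, the sketch below computes the gap in selection rates between groups, a metric often called the demographic parity difference. It is only one of several possible fairness measures, and the group labels and sample data are invented for this example.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of positive decisions per group.

    `outcomes` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI system made a positive decision for that person.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def parity_gap(outcomes):
    """Return the difference between the highest and lowest selection rate."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


# Invented sample: four decisions for each of two groups.
sample = [("A", True), ("A", True), ("A", False), ("A", True),
          ("B", True), ("B", False), ("B", False), ("B", False)]

print(selection_rates(sample))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(sample))       # 0.5
```

A policy might require that a gap above an agreed threshold triggers investigation. The threshold itself is a decision for the organization, and a large gap is a prompt to look closer rather than proof of discrimination.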

Creating an AI Policy using the ISO 42001 framework is a strategic approach to managing AI initiatives. By following these steps, organizations can ensure their AI Policy is robust, reflective of their values and strategy, and adaptable to changes in the AI landscape. This proactive approach not only aligns with best practices and regulatory requirements but also positions organizations to leverage AI technologies ethically and effectively, fostering innovation and trust among stakeholders.