When artificial intelligence (AI) first arrived on the desks of governance professionals, it was hailed as a time-saving marvel: a quiet assistant that could transcribe conversations, extract action items, and draft meeting minutes before the coffee cooled.

But as Australian companies move toward integrating AI into the heart of board operations, new concerns are rising alongside the efficiencies. The Governance Institute of Australia’s July 2024 issues paper, Artificial Intelligence (AI) and Board Minutes, delivers a sharp message to boards and company secretaries alike: tread carefully.

The stakes are high. Board minutes are not just administrative records; they are legally significant evidence of corporate decisions, processes, and intent. Missteps in how those records are created, stored, or interpreted could open the door to litigation, regulatory scrutiny, or a breakdown in boardroom trust.

So, is AI ready for the boardroom? The answer is nuanced.

A Time-Saver With Undeniable Appeal

At its best, AI is proving to be a valuable ally to governance teams, especially in environments where efficiency is king and human resources are stretched thin.

Tools like Microsoft Copilot, Zoom AI Companion, and Google Meet’s AI-generated notes feature can record conversations, transcribe discussions, summarize meetings, and extract action items — automatically. These capabilities are already transforming how internal team meetings, training sessions, and operational reviews are captured.

“Governance professionals can now generate a first draft of internal meeting notes or a ‘minute shell’ for routine board resolutions within minutes,” says a corporate secretary at a large, listed company in Sydney. “It gives us a running start, so we can spend our time polishing rather than typing from scratch.”

Indeed, the Governance Institute’s paper acknowledges several strengths in using AI for board support:

- Increased productivity for company secretariats
- Faster research and synthesis of supporting materials
- Effective action tracking through meeting summarization tools
- Potential for assisting with climate-related and ESG data by analyzing complex data sets

But the paper also introduces a cautionary counterpoint: using AI-generated board minutes is not as straightforward as it may seem.

The Integrity Dilemma: Too Much, Too Fast

Perhaps the most fundamental risk identified is confusing transcripts with minutes.

“Board minutes are not a verbatim account,” the Institute stresses. “They are a curated record of decisions and rationale — not every comment, objection, or joke made in the room.”

By their nature, AI-generated transcripts can over-document, leading to minutes that are too detailed, unclear, or legally problematic. When every word is recorded, directors may feel constrained — less likely to speak freely or raise difficult questions if they know their comments could become discoverable in litigation.

There’s also the danger of dual records. If a formal set of board minutes exists alongside an unofficial AI-generated transcript, legal questions arise: Which is the definitive record? What happens if they contradict?

Under section 251A of Australia’s Corporations Act 2001, companies must keep minute books recording the proceedings and resolutions of directors’ meetings, which serve as the formal record of board proceedings. “Introducing AI-generated content risks undermining that status,” warns the Institute. “It could erode the clarity and legal integrity of the official minute book.”

This principle is echoed internationally. In the U.S., under Delaware law and the Model Business Corporation Act, corporations must keep accurate board minutes that reflect decision-making and fiduciary oversight, not transcripts. In Canada, the Canada Business Corporations Act (CBCA) requires minutes to be maintained at the registered office and accessible to directors, serving as key legal records. New Zealand’s Companies Act 1993 requires companies to keep minutes of directors’ meetings and resolutions from at least the last seven years. In the UK, the Companies Act 2006 requires companies to keep board minutes for at least 10 years, and good practice is to record decisions and key reasoning without excessive detail.

Across all these jurisdictions, the principle is consistent: board minutes are not a comprehensive record of discussion but a legally significant summary of decisions and deliberations. The use of AI-generated transcripts or overly detailed summaries risks undermining the legal standing, confidentiality, and authority of the official minute record.

The Judgment Gap

Another issue is human judgment — or rather, AI’s lack of it.

Capturing the tone, nuance, and significance of a discussion is not easy. AI may detect keywords, but it can’t yet discern which director’s concerns carried weight or whether a question signaled a deeper unease.

“There’s a subtle art to good minute-taking,” says a senior governance advisor. “It’s not just about what was said, but what mattered — and why.”

AI also falls short in recognizing the implications of the business judgment rule, which shields directors when decisions are made in good faith, for a proper purpose, and with appropriate diligence. Capturing the essence of that deliberation takes human insight.

Moreover, trust is paramount. Directors rely on minute takers to be impartial, discreet, and accurate. Replacing or even heavily supplementing that role with AI introduces new risks — not just technical, but relational.

Data Privacy, Cybersecurity, and Shadow IT

As organizations begin experimenting with AI, new vulnerabilities emerge — many of them digital.

Using consumer-grade or unsupported AI tools introduces shadow IT into the boardroom, with employees bypassing official systems to access AI capabilities. This increases the risk of cyber breaches, data leaks, and unauthorized data storage — especially if recordings are uploaded to public cloud environments.

Confidential information — including IP, personal data, and strategic decisions — could be inadvertently exposed. Some AI tools store recordings or use input data to train their models, raising concerns over intellectual property and long-term data sovereignty.

Organizations must also grapple with the “black box” problem: AI systems often lack explainability. If something goes wrong — say, a flawed decision is made based on AI-generated minutes — can anyone retrace the logic?

A Smarter Way Forward: Governance by Design

Despite these red flags, the Governance Institute isn’t calling for a ban. Instead, it advocates a deliberate, disciplined approach: one that places governance before convenience.

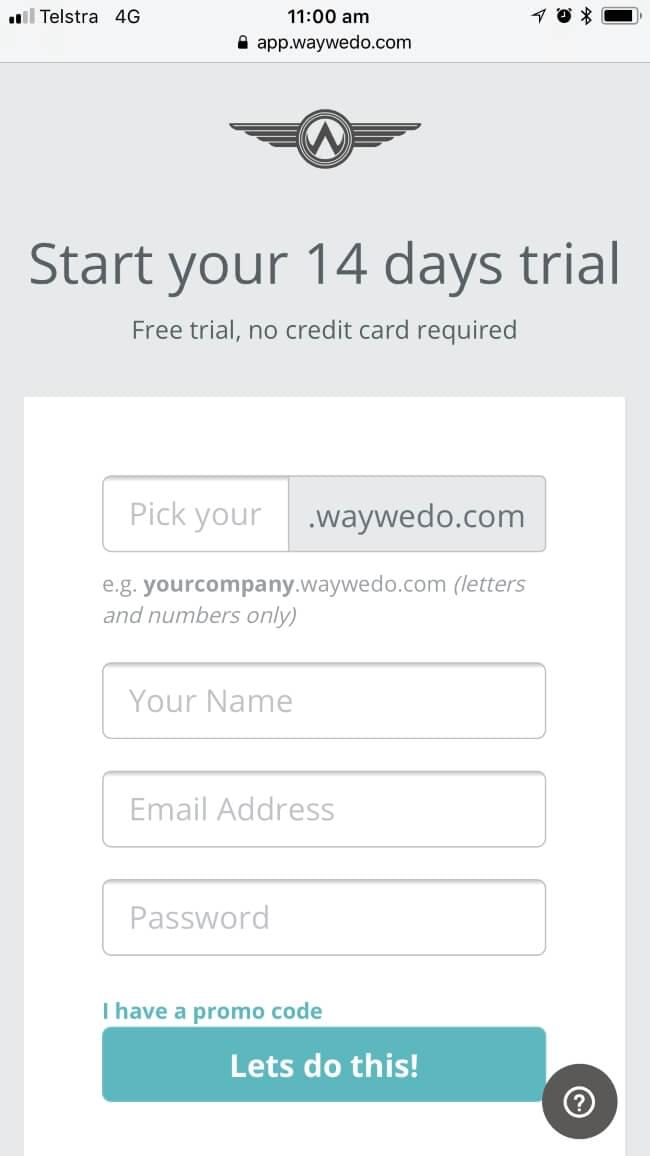

Platforms like Way We Do are playing a critical role in that transition.

“Way We Do enables governance teams to build AI-related policies directly into their workflow,” says its CEO, Jacqui Jones. “You can document a Responsible AI Policy, assign approval steps, and track compliance — all within a secure, role-based platform.”

For instance, a governance team might allow AI tools to generate initial drafts of internal meeting notes or minute shells — but require a human owner to review, revise, and formally publish the final version. Way We Do can route that process through a pre-defined workflow, recording approvals and storing documents with appropriate permissions and audit trails.

In addition, the platform can house policies around:

- Consent for meeting recordings
- Data retention and destruction
- Version control for drafts vs. final minutes
- IP attribution and usage rights for AI-generated content
- Regulatory compliance with Section 251A and other related standards and regulations

Way We Do also supports role-based task assignments, so minute takers, legal reviewers, and board chairs each have clear responsibilities in the approval process.
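To make the human-led, AI-assisted flow described above concrete, here is a minimal Python sketch of a role-gated approval workflow with an audit trail. It is an illustration only and does not represent Way We Do's product or API: the `MinuteDraft` class, the `TRANSITIONS` table, the status names, and the role names (`minute_taker`, `legal_reviewer`, `board_chair`) are all hypothetical, and a real deployment would add authentication, secure storage, and retention rules.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Status(Enum):
    AI_DRAFT = "ai_draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    PUBLISHED = "published"


# Hypothetical rule table: current status -> (role that must act, next status).
TRANSITIONS = {
    Status.AI_DRAFT: ("minute_taker", Status.IN_REVIEW),
    Status.IN_REVIEW: ("legal_reviewer", Status.APPROVED),
    Status.APPROVED: ("board_chair", Status.PUBLISHED),
}


@dataclass
class MinuteDraft:
    meeting: str
    text: str
    status: Status = Status.AI_DRAFT
    audit_trail: list = field(default_factory=list)

    def advance(self, user: str, role: str, revised_text: Optional[str] = None) -> None:
        """Move the draft one step forward if the acting role is allowed to."""
        if self.status not in TRANSITIONS:
            raise ValueError("Published minutes are final and cannot be changed")
        required_role, next_status = TRANSITIONS[self.status]
        if role != required_role:
            raise PermissionError(
                f"A {required_role} must act while status is {self.status.value}"
            )
        if revised_text is not None:
            self.text = revised_text  # the human revision replaces the AI wording
        # Record who did what, and when, before changing the status.
        self.audit_trail.append(
            {
                "user": user,
                "role": role,
                "from": self.status.value,
                "to": next_status.value,
                "revised": revised_text is not None,
                "at": datetime.now(timezone.utc).isoformat(),
            }
        )
        self.status = next_status


# Example run: an AI-generated minute shell passes through three human sign-offs.
draft = MinuteDraft(meeting="Board meeting (example)", text="AI-generated minute shell")
draft.advance("alex", "minute_taker", revised_text="Resolved: approve the budget.")
draft.advance("sam", "legal_reviewer")
draft.advance("kim", "board_chair")
print(draft.status.value, len(draft.audit_trail))  # prints "published 3"
```

The point of the design is that the AI output can only ever enter as a draft: nothing reaches the published state without three named humans acting in order, and each step leaves a timestamped entry that can be reviewed later.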

Building Literacy, Policy, and Trust

As the Governance Institute recommends, responsible AI deployment rests on three pillars:

- Governance frameworks that define purpose, risk, and accountability
- Human oversight, ensuring AI is a tool, not a substitute for judgment
- Security-first thinking, preventing data breaches and regulatory missteps

Training and AI literacy are also essential. Directors and governance professionals must understand not only what AI can do but also what it shouldn’t do. Over time, comfort will grow, especially if AI is deployed first in low-risk settings like management team meetings, training sessions, or policy reviews.

Let’s Agree: Human-Led, AI-Assisted

AI is here to stay — but its role in boardrooms must be carefully defined, controlled, and monitored.

Used wisely, it can streamline routine documentation and reduce administrative overhead. But when it comes to high-stakes decision-making, the human minute taker still reigns supreme.

As governance evolves, the answer isn’t to choose between AI and humans. It’s to design systems — like those offered by Way We Do — that let them work together, with each playing to their strengths.

Because when it comes to the official record of how your organization governs itself, the final word should still be written by someone who understands what was really said.

Source: Governance Institute of Australia (2024). “Artificial Intelligence (AI) and Board Minutes – Issues Paper.”

Additional insights provided by Way We Do.